If you read our previous blog post, you know that equipping your company's AI applications with a Retrieval-Augmented Generation (RAG) system brings real benefits: you leverage your knowledge base, literally talk to your data, get contextualized and informed answers to your queries, improve transparency and, most importantly, gain control over who gets access to your data.

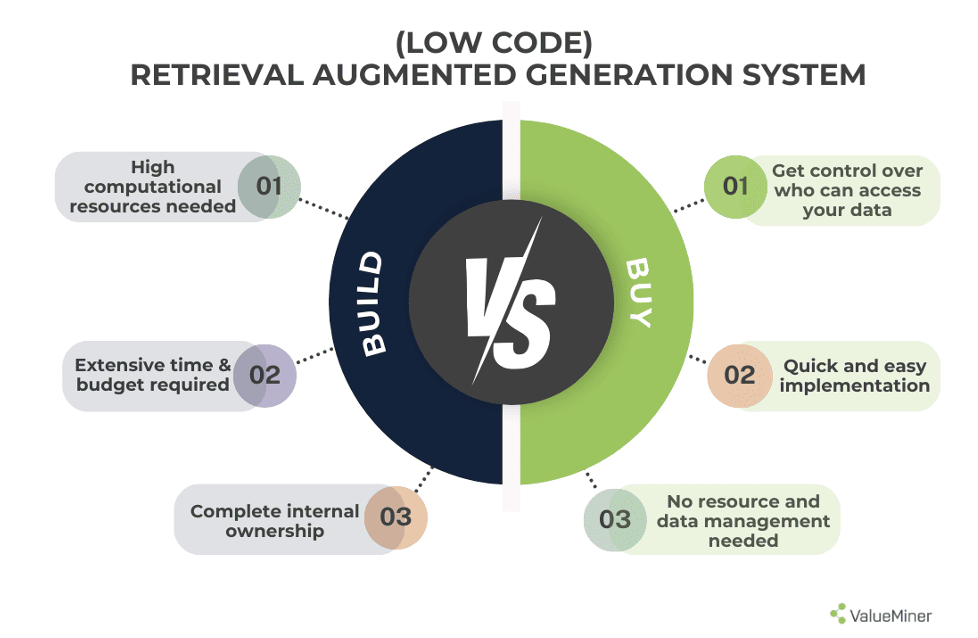

However, when it comes to implementing RAG, as a decision-maker you face a critical choice: should you build your own RAG system within your company, or buy an AI business solution that offers RAG integration?

In this article we will explore both options. You will learn about the advantages and challenges of building a RAG system in-house versus buying a low code solution like the ValueMiner RAG system.

Our analysis will help you make the decision that best aligns with your company's strategic goals, budget and resources, regardless of your business size and industry.

How to build your own Retrieval Augmented Generation (RAG) system in-house: our hands-on experience

To build a Retrieval-Augmented Generation (RAG) system from scratch, you need to complete four steps:

- Build the frontend: the graphical user interface where the user can interact or chat with the LLM, upload documents, and more.

- Build the backend: a system that understands and executes the requests of the user.

- Set up the LLM: the brain of the RAG system, which generates answers from the user's question and the content retrieved from your data storage.

- Set up the server and hardware resources: where the LLM runs.
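
To make the four building blocks above more concrete, here is a minimal sketch of what the backend does between the frontend and the LLM: it retrieves the most relevant passage for a question and packs it into a prompt. Everything in it is an assumption made for illustration: the sample documents, the function names, the simple word-overlap ranking (a real system would typically use embeddings and a vector store), and `fake_llm`, which merely stands in for the actual model call.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(question: str, documents: list[str], top_k: int = 1) -> list[str]:
    """Return the top_k documents most similar to the question."""
    q_vec = Counter(tokenize(question))
    ranked = sorted(
        documents,
        key=lambda d: cosine_similarity(q_vec, Counter(tokenize(d))),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question: str, context: list[str]) -> str:
    """Combine the retrieved passages and the user question into one prompt."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}"


def fake_llm(prompt: str) -> str:
    """Placeholder for the real LLM call; it only reports the prompt size."""
    return f"[LLM answer based on a prompt of {len(prompt)} characters]"


# Hypothetical knowledge base and question, for illustration only.
documents = [
    "Vacation requests must be submitted two weeks in advance.",
    "The office is closed on public holidays.",
    "Expense reports are due by the fifth day of each month.",
]
question = "When are expense reports due?"
context = retrieve(question, documents, top_k=1)
prompt = build_prompt(question, context)
answer = fake_llm(prompt)
```

In a real setup, the frontend would send `question` to the backend, and `fake_llm` would be replaced by a call to the LLM running on your server.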

There are several open-source projects that can be used to set up the frontend and backend of your Retrieval-Augmented Generation (RAG) system. We chose between the following options:

- PrivateGPT, which offers a complete solution including model loading, a software/API interface, vectorization of documents, and more,

- Ollama + Open WebUI, which run LLMs on your own servers and provide a graphical user interface,

- and Hugging Face Chat UI, an open-source chat interface with support for tools, web search, multimodal input and many API providers.

All these products offer both a frontend and a backend and provide detailed documentation on how to install the LLM and connect it to the software.
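
As an illustration of how a backend connects to one of these tools, the sketch below sends a prompt to a locally running Ollama server over its REST API. It assumes Ollama is installed and listening on its default port 11434; the model name in the usage note is a placeholder for whichever model you have pulled, and the helper names `build_payload` and `ask_ollama` are our own.

```python
import json
import urllib.request

# Default local endpoint of an Ollama server (assumes a standard installation).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_payload(model: str, prompt: str) -> bytes:
    """Encode a non-streaming generation request as JSON bytes."""
    return json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")


def ask_ollama(model: str, prompt: str, url: str = OLLAMA_URL) -> str:
    """Send the prompt to a running Ollama server and return the generated text."""
    request = urllib.request.Request(
        url,
        data=build_payload(model, prompt),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        body = json.loads(response.read().decode("utf-8"))
    # With "stream": False, the generated text is returned in the "response" field.
    return body["response"]
```

With the server running, a call such as `ask_ollama("llama3", "Summarize our travel policy.")` would return the model's answer as a string; without a running server, the call raises a connection error.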

As for the LLM, you can either opt for a pre-trained LLM or train your own with your specific data.

The characteristics of your server and computing resources depend on the scale and complexity of the RAG system you want to develop. Ideally, your server should be designed for AI workloads. Make sure it has sufficient computing power, memory, and storage capacity to handle the LLM and your datasets. You can improve computing performance with dedicated graphics cards, suitable chipsets, and enough RAM; as a rule of thumb, your RAM should be at least twice as large as the LLM. We had a very positive experience with the Metal API on Apple devices.
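
The rule of thumb that RAM should be at least twice as large as the LLM can be turned into a quick back-of-the-envelope calculation. The sketch below assumes that the size of a model is roughly its number of parameters multiplied by the bytes stored per parameter (2 bytes for 16-bit weights, 0.5 bytes for 4-bit quantized weights). The 7-billion-parameter model is only an example, and the function names are our own.

```python
def model_size_gb(num_parameters: float, bytes_per_parameter: float) -> float:
    """Approximate size of the model weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_parameters * bytes_per_parameter / 1e9


def recommended_ram_gb(
    num_parameters: float,
    bytes_per_parameter: float,
    headroom_factor: float = 2.0,
) -> float:
    """Apply the 'at least twice the model size' rule of thumb."""
    return headroom_factor * model_size_gb(num_parameters, bytes_per_parameter)


# Example: a hypothetical model with 7 billion parameters.
size_16bit = model_size_gb(7e9, 2.0)       # 16-bit weights
ram_16bit = recommended_ram_gb(7e9, 2.0)
size_4bit = model_size_gb(7e9, 0.5)        # 4-bit quantized weights
ram_4bit = recommended_ram_gb(7e9, 0.5)

print(f"16-bit: about {size_16bit:.1f} GB of weights, plan for {ram_16bit:.1f} GB of RAM")
print(f"4-bit:  about {size_4bit:.1f} GB of weights, plan for {ram_4bit:.1f} GB of RAM")
```

This estimate covers the model weights only; your documents, the vector index and the operating system need memory on top of it.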

Must-have skills needed to build a Retrieval Augmented Generation system in-house:

To set up your Retrieval-Augmented Generation (RAG) system internally, you should be able to count on the following three skills within your team:

- Python proficiency: Python is the de facto language of AI development. Knowing Python is key because almost all LLM-related components and open-source AI projects are written in it. Basic knowledge of Python is also required to program the interface between the frontend and the backend.

- Command line expertise: To set up the three main components (frontend, backend and the LLM) of your Retrieval-Augmented Generation (RAG) system and customize its functionalities, your development team must be able to use the command line of your operating system.

- Database and server administration: Whether you need this third set of skills depends on the complexity of the system you want to build. A simple RAG system can be set up with basic knowledge of web and application development. However, more complex systems might require additional IT infrastructure skills or cooperation with external developers.

Benefits and challenges of building a Retrieval-Augmented Generation (RAG) system internally:

For businesses that have the required technical resources and skills, setting up a RAG system from scratch in-house offers one main advantage: maintaining full ownership and control over your system.

Nevertheless, building a Retrieval-Augmented Generation (RAG) system in-house is no small task. It requires a lot of technical expertise, which might not always be available within a company and can be lost over time as teams change.

Additionally, the process of developing RAG is quite lengthy as each step, from setting up the frontend to evaluating and refining the model, requires a significant amount of time and effort to achieve acceptable results.

Another challenge lies in the resource intensity of RAG systems. Training and deploying these models require high-performance hardware and storage infrastructure, which can be quite costly to acquire and maintain, especially if yours is a small company with budget constraints.

Why buy a low code Retrieval-Augmented Generation (RAG) system for your company

Purchasing a low code RAG solution like the ValueMiner RAG system comes with multiple benefits.

- A low code solution allows for quick implementation without the need for in-house development resources.

- Partnering with experienced AI providers significantly reduces technical risks and unforeseen issues.

- You do not need your own computational resources or specific IT skills, because the provider of a low code RAG system takes care of both.

- Outsourcing your RAG technology frees you from complex data and resource management.

- Advanced low code solutions like the ValueMiner RAG system prioritize data security, providing features that let you control who has access to company information based on their role within the company.

- A low code Retrieval Augmented Generation (RAG) solution offers easy-to-use interfaces where you can upload files and data with a single click and automatically feed your AI chatbot.

- A low code solution enables you to customize your RAG system swiftly based on your specific organizational governance and requirements.

- Low code RAG solutions are not one-size-fits-all. With providers like ValueMiner, you can benefit from cost-effective pricing plans tailored to different organizational needs, ensuring maximum value for your investment.
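
The role-based access control mentioned in the list above can be illustrated with a short sketch. It is not ValueMiner's implementation, only one common way to approach the idea: every document carries the roles allowed to see it, and documents are filtered by the user's roles before anything is retrieved for the LLM. The `Document` class, the role names and the sample texts are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    """A piece of company content plus the roles allowed to read it."""
    text: str
    allowed_roles: frozenset[str]


def visible_documents(documents: list[Document], user_roles: set[str]) -> list[Document]:
    """Keep only documents that share at least one role with the user."""
    return [d for d in documents if d.allowed_roles & user_roles]


# Hypothetical knowledge base with two access levels.
documents = [
    Document("Salary bands", frozenset({"hr"})),
    Document("Office opening hours", frozenset({"hr", "employee"})),
]

# A regular employee only sees the second document; HR sees both.
employee_view = visible_documents(documents, {"employee"})
hr_view = visible_documents(documents, {"hr"})
```

Filtering before retrieval means that restricted content never reaches the prompt, so the chatbot cannot leak it in an answer.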

Build vs. buy RAG system: What should you choose?

The decision to build or buy depends on your specific needs and resources. Here is a quick guide summarizing our considerations:

- Build in-house if you have a strong AI development team, extensive time and capacity available, and a generous budget for hardware resources. Developing a RAG system in-house is also your ideal option if you prioritize internal control and are comfortable with a high mid-term investment.

- Buy a low code RAG system if you want your system up and running quickly, or if you lack the internal development expertise or computing resources. Get support from AI experts if you value maintaining control over data access and security, and preventing information leaks and AI hallucinations. For a versatile low-code AI platform that offers RAG integration, secures your company's AI applications and is already trusted by many leading companies, ValueMiner is your best bet.

In conclusion, now that you understand the difference between setting up your RAG system in-house and relying on RAG experts and low code Retrieval-Augmented Generation (RAG) systems, and if you are looking for an easy-to-use, complete and secure tool, it's time to give ValueMiner a try.